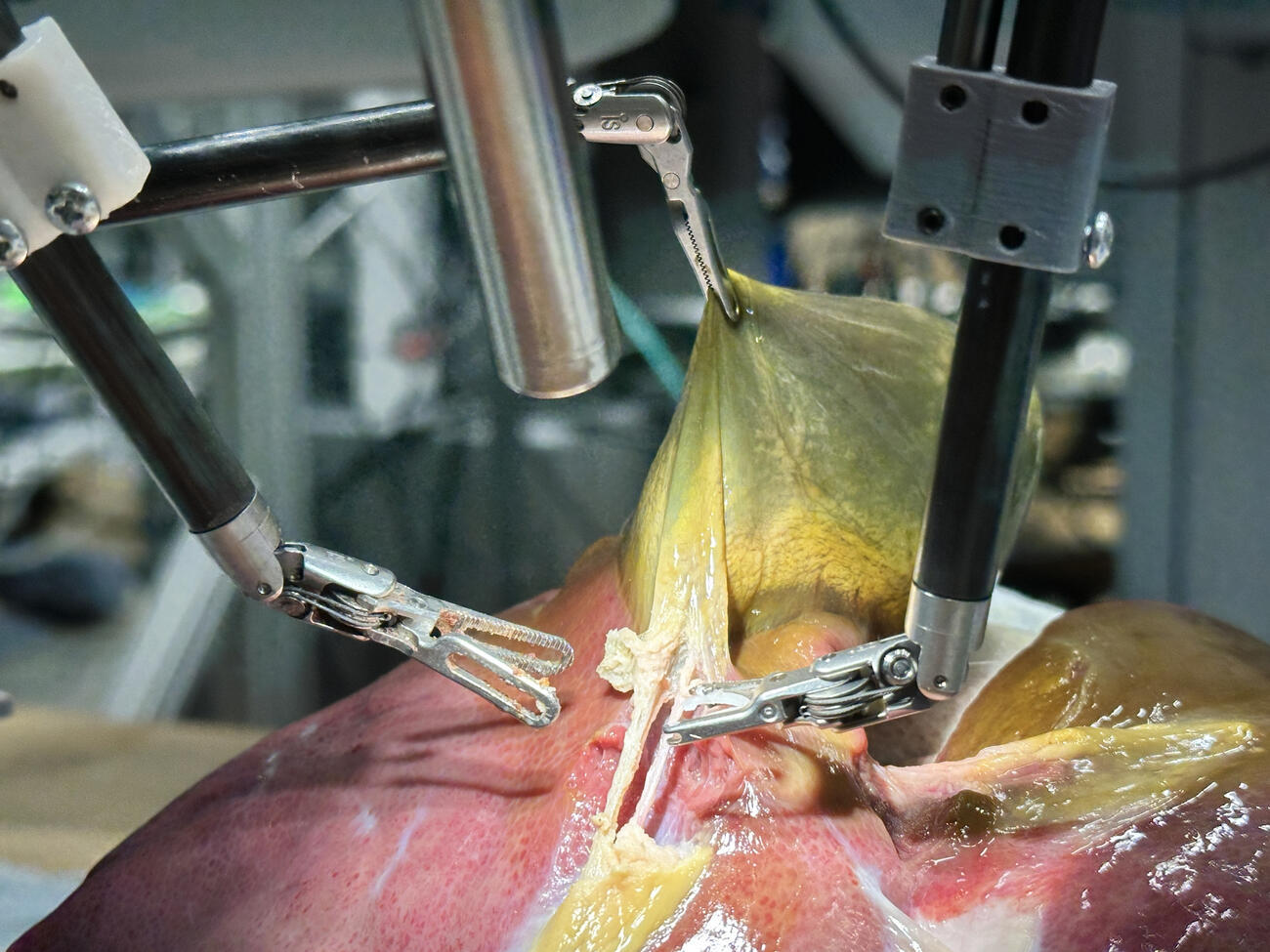

A robot trained on videos of surgeries performed a lengthy phase of a gallbladder removal without human help. The robot operated for the first time on a lifelike patient, and during the operation, responded to and learned from voice commands from the team—like a novice surgeon working with a mentor.

The robot performed unflappably across trials and with the expertise of a skilled human surgeon, even during unexpected scenarios typical of real-life medical emergencies.

See, the part that I don’t like is that this is a learning algorithm trained on videos of surgeries.

That’s such a fucking stupid idea. That’s literally so much worse than letting surgeons use robot arms to do surgeries as your primary source of data and making fine-tuned adjustments based on visual data in addition to other electromagnetic readings.

Yeah, but the training set of videos is probably vastly larger, and the thing about AI models is that if the training set is too small, they don’t really work at all. Once you get above a certain dataset size, they start to become competent.

After all, I assume the people doing this research have already considered that. I doubt they’re reading your comment right now, slapping their foreheads, and going, “Damn, this random guy on the internet is right, he’s so much more intelligent than us scientists.”

There’s no evidence they will ever reach quality output with infinite data, either. In that case, quality matters.

No, we don’t know. We are not AI researchers, after all. Nonetheless, I’m more inclined to defer to experts than to you. No offence (I mean, there is some offence, because this is a stupid conversation), but you have no qualifications.

It’s less of an unknown and more of a “it has never demonstrated any such capability.”

Btw, both OpenAI and DeepMind wrote papers showing that their then-current models would never approach the human error rate, even with infinite training. Those papers correctly predicted the performance of GPT-4.

Care to elaborate why?

From my point of view I don’t see a problem with that. Or let’s say: the potential risks highly depend on the specific setup.

Imagine if the Tesla autopilot without lidar that crashed into things and drove on the sidewalk was actually a scalpel navigating your spleen.

Absolutely stupid example, because that kind of assumes medical professionals have the same standards as Elon Musk.

Elon Musk literally owns a medical equipment company that puts chips in people’s brains. Nothing is sacred unless we protect it.

Being trained on videos means it has no ability to adapt, improvise, or use knowledge during the surgery.

Edit: However, in the context of this particular robot, it does seem that additional input was given and other training was added in order for it to expand beyond what it was taught through the videos. As the study noted, the surgeries were performed with 100% accuracy. So in this case, I personally don’t have any problems.

I actually don’t think that’s the problem. The problem is that the AI only accounts for visible, surface-level information.

AI doesn’t have object permanence: once something is out of sight, it does not exist.

If you read how they programmed this robot, it seems that it can anticipate things like that. Also keep in mind that this is only designed to do one type of surgery.

I’m cautiously optimistic.

I’d still expect human supervision, though.