Well, according to the Supreme Court he could just order Seal Team Six to kill Trump as long as he called it an “official act”.

But Dark Brandon never came…

Three raccoons in a trench coat. I talk politics and furries.

Other socials: https://ragdollx.carrd.co/


doesn’t it follow that AI-generated CSAM can only be generated if the AI has been trained on CSAM?

Not quite, since the whole point of image generators is that they’re able to combine different concepts to create new images. That’s why DALL-E 2 was able to create images of an astronaut riding a horse on the moon, even though it never saw such images, and probably never even saw astronauts and horses in the same image. So in theory these models can combine the concepts of porn and children even if they never actually saw any CSAM during training, though I’m not gonna thoroughly test this possibility myself.

Still, as the article says, since Stable Diffusion is publicly available, someone can train it on CSAM images on their own computer specifically to make the model better at generating them. Based on my limited understanding of the lawsuits that Stability AI is currently dealing with (1, 2), whether they can be sued for how users employ their models will depend on how exactly these cases play out and, if the plaintiffs do win, whether their arguments can be applied outside of copyright law to include harmful content generated with SD.

My question is: why aren’t OpenAI, Google, Microsoft, Anthropic… sued for possession of CSAM? It’s clearly in their training datasets.

Well they don’t own the LAION dataset, which is what their image generators are trained on. And to sue either LAION or the companies that use their datasets you’d probably have to clear a very high bar of proving that they have CSAM images downloaded, know that they are there and have not removed them. It’s similar to how social media companies can’t be held liable for users posting CSAM to their website if they can show that they’re actually trying to remove these images. Some things will slip through the cracks, but if you show that you’re actually trying to deal with the problem you won’t get sued.

LAION actually doesn’t even provide the images themselves, only links to images on the internet, and they do a lot of screening to remove potentially illegal content. As they mention in this article, there was a report showing that 3,226 suspected CSAM images were linked in the dataset, of which 1,008 were confirmed by the Canadian Centre for Child Protection to be known instances of CSAM, while the rest were potential matches based on further analyses by the authors of the report. As they point out, there are valid arguments that this 3.2K figure could be either an overestimate or an underestimate of the true number of CSAM images in the dataset.

The question then is whether any image generators were trained on these CSAM images before they were taken down from the internet, or whether there is unidentified CSAM in the datasets these models are being trained on. The truth is that we’ll likely never know for sure unless the aforementioned trials reveal some email where someone at Stability AI admitted that they didn’t filter potentially unsafe images, knew about CSAM in the data and refused to remove it, though for obvious reasons that’s unlikely to happen. Still, since the LAION dataset has billions of images, even if they are as thorough as possible in filtering CSAM, chances are that something slipped through the cracks, so I wouldn’t bet my money on them being able to infallibly remove 100% of it. Whether some of these AI models were trained on these images then depends on how they filtered potentially harmful content, or whether they filtered adult content in general.

I’m pretty sure you press B to blow.

There are several problems with these arguments.

[…] it concluded that if you do a lot of fine tuning then it can summarize news stories in a way that six people (marginally better than n=1 anecdote in the first link, I guess?) rated on par with Amazon mturk freelance writers. […]

It concluded quite the opposite actually. From the “Instruction Tuned Models Have Strong Summarization Ability.” section:

Across the two datasets and three aspects, we find that the zero-shot instruction-tuned GPT-3 models, especially Instruct Curie and Davinci, perform the best overall. Compared to the fine-tuned LMs (e.g., Pegasus), Instruct Davinci achieves higher coherence and relevance scores (4.15 vs. 3.93 and 4.60 vs. 4.40) on CNN and higher faithfulness and relevance scores (0.97 vs. 0.57 and 4.28 vs. 3.85) on XSUM, which is consistent with recent work (Goyal et al., 2022).

You might be confusing instruction tuning with fine-tuning for text summarization. Instruction tuning involves training a model to respond helpfully to instructions in a user-assistant setting (often by rewarding it based on the helpfulness of its responses), and it’s been the industry standard ever since the first ChatGPT showed its effectiveness.

Also they actually recruited thirty evaluators from MTurk, and six writers from Upwork (See “Human Evaluation Protocol” and “Writer Recruitment”).

Their conclusions are also consistent with the study you linked to, in which they fine-tuned the Mistral and Llama models in an attempt to generate better summaries, but the evaluators still rated them lower than the human summaries. Though I’m not sure you will be convinced by this study either since, as they state in the “PHASE 3 – FINAL ASSESSMENT” section:

ASIC engaged five business representatives (EL2 level staff across two business teams) to assess both the human and AI generated summaries. Each assessor was assigned one submission to read and rate the two associated summaries - labelled A and B.

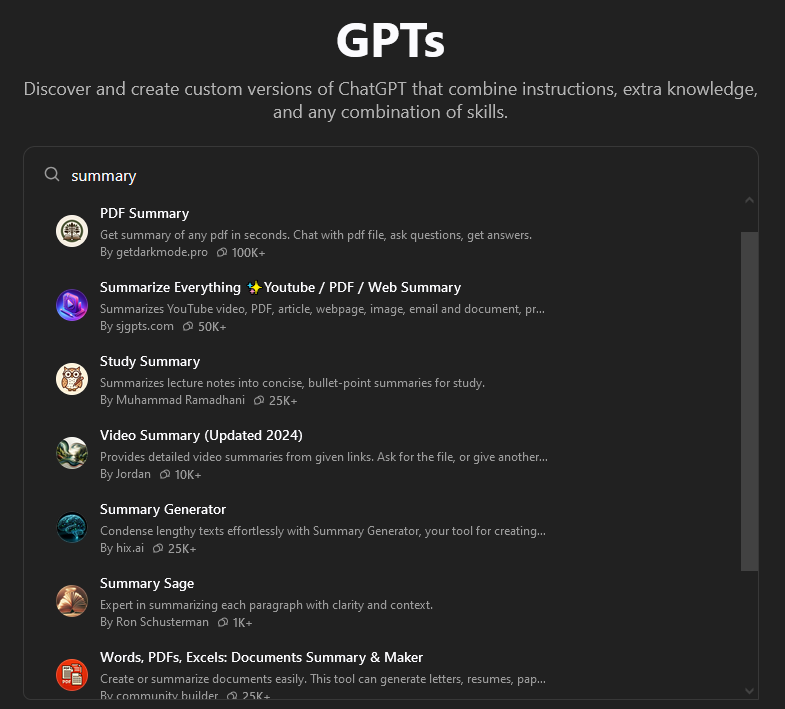

Even putting all of this aside, you can actually use custom ChatGPTs that have been fine-tuned specifically to write summaries and test them for yourself if you want:

And they also noted that this preference for how the LLM summarized was individual, as in blind tests some of them still just disliked it. There are leagues and leagues of room between that and “summarizes better than humans.”

The exact same thing can be said about the lower scores in the study you linked to, so what is the exact threshold? Would you only trust an AI to summarize things if 100% of humans liked it? Besides, even if you think the best model in the study was still not good enough, there are other, even better models that have been published since then, like the ones at the top of the aforementioned leaderboards, and others like GPT-4o, OpenAI o1 and OpenAI o3.

An LLM will tell you anything and phrase it with enough confidence that someone with no expertise on a subject won’t know the difference.

That’s why I linked to the first article where they specifically asked an actual lawyer to evaluate summaries of legal texts written by LLMs and interns - and as we can see he thought the AI was better.

My problem with LLMs is that it is fundamentally magic-brained to trust something without the power to reason to evaluate whether or not it’s feeding you absolute horseshit. With a human being editing Wikipedia, you trust the community of other volunteers who are knowledgeable in their field to notice if someone puts something insanely wrong in a Wikipedia article.

Whenever I’ve gotten into debates about the philosophy of AI and its relation to things like art, reason and consciousness, the arguments I’ve seen always end up being rather inconsistent and condescending, so I’m not even going to get into that. However I will point out that if we take the general definition of reasoning to mean “drawing logical conclusions through inference and extrapolations based on evidence”, the Wikipedia pages on LLMs, OpenAI o3 and “commonsense reasoning” explicitly describe AIs as reasoning. You’re welcome to disagree with this assessment, but if you do I hope we can then agree that, as I stated previously, Wikipedia contributors and their sources aren’t always reliable.

But sure, let’s put that aside and assume that reasoning is a magical aspect of the human brain that inherently excludes AI, so LLMs simply can’t reason… So what?

AlphaFold can’t reason, but it still can predict the structure of proteins better than humans, so it would be naive to not use it simply because it doesn’t reason. In the same vein, even if you want to conclude that LLMs can’t reason this doesn’t change the fact that they are useful tools, and perform either equal to or even better than humans in many tasks, including summarizing text.

LLMs are, for all intents and purposes, just really complicated functions that model some data distribution we give them. Language obviously has a predictable distribution since we don’t speak or write randomly, so given proper data and training there’s no reason to believe that an AI can’t model that even better than humans. Hell, we don’t even need to get so conceptual and broad with these arguments: we can just look at the quantitative results of these models, and assess their usefulness ourselves by simply using them.
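As a drastically simplified illustration of what “a function that models a data distribution” means, here is a toy bigram model that just counts which word follows which and turns those counts into next-word probabilities. This is only a sketch under obvious assumptions: the tiny corpus and the function names are made up for the example, and real LLMs use neural networks over subword tokens and much longer contexts, not raw word-pair counts.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus, made up purely for illustration.
CORPUS = ["the cat sat", "the cat ran", "the dog sat"]


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> mark the start and end of a sentence.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_distribution(counts: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn the follow-up counts for `word` into probabilities (empty if unseen)."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}
    return {w: n / total for w, n in followers.items()}


if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    print(next_word_distribution(model, "the"))  # "cat" is twice as likely as "dog"
    print(next_word_distribution(model, "cat"))
```

The point is only that text is not random, so even simple counting captures some of its structure; bigger models with more data capture much more of it.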

Again, I don’t trust everything these AIs generate, there are things for which I don’t use them, and even when I do, sometimes I just don’t like their answers. But I see no reason to believe that they are inherently more harmful than humans when it comes to the information they generate, or that, even in their current state, they’re dangerously inaccurate. If nothing else, I can just ask one to summarize a Wikipedia page for me and be confident that it’ll do so accurately - though as the links I mentioned demonstrate, and as you may have come to believe after considering Wikipedia’s assessment of AI reasoning, Wikipedia contributors and their sources aren’t 100% reliable.

We both know that humans fall for and say absolute horseshit. Heck, your comment is a good example of this, where you moved the goalpost again, failed to address or outright ignored many of my arguments, and didn’t properly engage with any of the sources cited, even your own.

If you just dislike AI on principle because this technology inherently bothers you that’s fine, you’re entitled to your opinion. But let’s not pretend that this is because of some logical or quantifiable metric that proves that AI is so dangerous or bad that it can’t be used even to help university students with some basic tasks.

It’s bad at that, because effective summarization requires an understanding of the whole, which AI doesn’t have.

LLMs can learn skills beyond what’s expected, but of course that depends on the exact model, training data and training time (See concepts like ‘emergence’ and ‘grokking’).

The models tested in the study you mention (Llama-2 & Mistral) are already pretty outdated compared to the LLMs that currently lead the rankings. Indeed, research looking at the summarization capabilities of other models suggests that human evaluators rate their summaries as equal to or even better than those written by human summarizers.

The difference between what you’re doing and what people were doing 10 years ago is that what they were doing was referencing text written by people with an understanding of the subject, who made specific choices on what information was important to convey, while AI is just glorified text prediction.

Well that’s a different argument from the first commenter, but to answer your point: The key here is trust.

When I use an AI to summarize text, reword something or write code, I trust that it’ll do a decent job at that - which is indeed not always the case. There were times when I didn’t like how it wrote something, so I just did it myself, and I don’t use AI when researching or writing something that is more meaningful or important to me. This is why I don’t use AI in the same way as some of my classmates, and the same is true for how I use Wikipedia.

When using Wikipedia we trust that the contributors who wrote the information on the page didn’t just cherry-pick their sources and are accurately summarizing and representing said sources, which sometimes is just not the case. Even when not being infiltrated by bad actors, humans are just flawed and biased, so the information on Wikipedia can be slanted or out of date - and this is not even getting into how the sources themselves are often also biased.

It’s completely fair to say that AI can’t always be trusted - again, I’m certainly not always satisfied with it myself - but the same has always been true of other types of technology and humans themselves. This is why I think that even in their current, arguably still developmental stage, LLMs aren’t more harmful than technological changes in information we’ve seen in the past.

AI is ultimately just a tool, and whether it’s beneficial or detrimental depends on how you use it.

I’ve seen some of my classmates use it to just generate an answer which they copy and paste into their work, and yeah, it does suck.

I use it to summarize texts that I know I won’t have time to read until the next class, create revision questions based on my notes, to check my grammar or rephrase things I wrote, and sometimes I use Perplexity to quickly search for some information without having to rely on Google, or having to click through several pages.

Truly it isn’t much different from what we used to do around 2000-2015, which was to just Google things and mainly use Wikipedia as a source. You can just copy and paste the first results you find, or whatever information is on Wikipedia without absorbing it, or you can use them to truly research and understand something. Lazy students have always been around and will continue to be around.

Honestly as your run-of-the-mill introvert I wouldn’t even go to a party

He might even pen some irritated tweets!

Didn’t some country already try this and fail miserably?

Edit: Oh yeah, El Salvador invested $150M in bitcoin only for it to lose half its value lol

Edit 2: lmao at the replies. For those of you who haven’t read the Wikipedia article on this, here’s the gist of it:

Salvadoran president Bukele forces businesses to accept BTC as legal tender, sets aside $150M in cash to back up his BTC plans, and offers $30 to anyone who signs up for a government-backed digital BTC wallet. Most Salvadorans never use it, and most who do just spend their $30 and leave. Less than 0.0001% of financial transactions use this BTC digital wallet, and most Salvadorans disapprove of Bukele’s decisions, with >70% having little or no confidence in BTC, and 9/10 not even understanding what it is.

Bukele announces a “Bitcoin City”, which causes El Salvador’s overseas bonds to fall by 30%.

In 2022, because of the BTC crash, the Salvadoran national reserves lost $22M.

By 2022 only 20% of businesses were actually using BTC, and only 3% thought it was actually valuable. By that point El Salvador’s BTC had lost half of its value, and Bukele responded to its volatility by “buying the dip” like a maniac, with many economists predicting the country would likely default on its debt. And in usual right-wing fashion he cut public spending to make up for his incompetence, including water infrastructure and public services in some municipalities.

Finally, after all of this bullshit, in March 2024 El Salvador’s BTC holdings stood at a 50% profit. Now that it’s valued at >$100K their profit is higher, though by how much I’m not sure. Bukele still hasn’t sold the BTC for some reason.

If you look at all of this and genuinely think that the recent (and undoubtedly temporary) increase in the value of Bitcoin makes this whole thing a “Big Chungus W win for Bitcoin” or whatever, I strongly urge you to stay away from crypto and any casinos for your own sake. I assure you that there is a plethora of better ways to spend the time of government employees and taxpayer money, and you deserve better than whatever faux-utopia crypto bros have sold to you. Actually investing in the country’s infrastructure and economy, or even something like Norway’s sovereign wealth fund, are much better ways to improve the economy without some long-term gamble that causes citizens to suffer.

He was probably KRHAMAS

Meh, we get a new one of those every year now. They’ll have to try harder to impress me.

Really it’s going to depend on the specific product and where/how it’s produced, but of course as with anywhere else a lot of our stuff is imported from other countries, so since the Brazilian Real lost a lot of its value relative to other currencies in the past few years things have gotten a lot more expensive.

This is obviously not an exact or thorough comparison, but just to give you an idea: the monthly minimum and median wages are, respectively (assuming the usual 40h/week of work):

U.S. - $1,160 | $4,949

Brazil - R$1,412 ($231.86) | R$3,123 ($512.82)

I could be completely wrong, but the cheapest USB-3 phone charger I saw on Amazon was $5, so 0.43% of the minimum wage and 0.1% of the median wage, while on Brazil’s main online shop (Mercado Livre) the cheapest one I saw was R$32, or 2.3% of the minimum wage and 1% of the median wage, which seems about right considering the dollar is worth about R$6 right now.

A new Nintendo Switch Lite costs $200, while here in Brazil the cheapest one I could find was R$1400, so almost the entirety of our minimum wage.
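To make the arithmetic above easy to redo with other products, here is a small sketch that expresses a price as a percentage of the monthly minimum and median wages listed above. The wage and price figures are the ones from this comment; the function names are just made up for the example.

```python
# Monthly wages from the comparison above (40h/week assumed there).
WAGES = {
    "US": {"minimum": 1160.0, "median": 4949.0},     # in US$
    "Brazil": {"minimum": 1412.0, "median": 3123.0},  # in R$
}


def share_of_wage(price: float, wage: float) -> float:
    """Return a price as a percentage of a monthly wage."""
    return 100.0 * price / wage


def compare(country: str, price: float) -> dict[str, float]:
    """Price as % of each listed wage in `country`, rounded to 2 decimals.

    `price` must be in the same currency as that country's wages.
    """
    return {
        kind: round(share_of_wage(price, wage), 2)
        for kind, wage in WAGES[country].items()
    }


if __name__ == "__main__":
    print("Charger, US ($5):", compare("US", 5.0))
    print("Charger, Brazil (R$32):", compare("Brazil", 32.0))
    print("Switch Lite, US ($200):", compare("US", 200.0))
    print("Switch Lite, Brazil (R$1400):", compare("Brazil", 1400.0))
```

For the Switch Lite this gives about 17% of the U.S. minimum wage versus about 99% of the Brazilian one.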

Point is, most things, and especially electronics, are expensive as heck nowadays.

Well, he ain’t wrong.

I feel bad for Americans, but a selfish part of me kind of hopes that the dollar crashes so that electronics become cheaper here in Brazil.

I heard that he’s an ethereal being from another dimension that has already faded away from our plane of existence, so the police are wasting their time looking for him and should close the case.

well at least you wash your hands

Fox host says he ‘hasn’t washed hands in 10 years’

Many Americans don’t always wash their hands after going to the bathroom

Eugh. Raw face is just gross.

He’s also just explicitly said that he wants to be a dictator, but that apparently isn’t a turn-off for much of his base, and in fact many of them see it as a positive: https://apnews.com/article/trump-hannity-dictator-authoritarian-presidential-election-f27e7e9d7c13fabbe3ae7dd7f1235c72
