Just picked up my first Framework 13. Moves like this are why I’m increasingly trusting of their mission and vision.

Hopefully they stay private, or better yet, change their corporate charter into a cooperative. Never go public.

Still waaay too expensive :(

I’d pay it if they had a few things I’m looking for:

- TrackPoint (ThinkPad nipple)

- Physical mouse buttons, including a middle mouse button

Basically, I want the ThinkPad keyboard on a Framework laptop.

My main problem with those prices is not even the value for money (albeit imo there is quite a disparity here), but the financial damage when lost/stolen. For private use, I just don’t want to carry any device around that is substantially more expensive than ~1000 EUR.

And the only thing Framework offers me that no one else inside the EU does is 15+ inches without a num block and with a centered touchpad. The market share of laptops with num blocks (and accordingly off-center touchpads) is infuriatingly high and tells me 99.5% of people either have a tiny left hand or do no serious typing on the keyboard.

I’m not worried about theft at all, but I hate typing on crappy keyboards with no travel, and I don’t like using the trackpad unless I absolutely have to (I have a MacBook Pro at work, which supposedly has the best trackpad, and I still don’t like using it).

FFS I was just about to buy myself one and now I’m obviously gonna have to wait until November

Oh, wait, I can just upgrade it. Nbd.

I’d prefer an AMD 9000 series because I refuse to support Nvidia, but the upgradability is still an amazing achievement. I’m glad to see Framework delivering.

It could help if AMD still manufactured discrete mobile GPUs.

Their website still lists the RX 7000M & S series, but I don’t know of a single *other* laptop brand that currently offers them. There certainly is no hint of a 9000 series mobile GPU, which is a shame. I probably won’t buy another laptop until AMD is back in the mobile GPU game. Not that they’re perfect, but they are significantly less evil than Nvidia.

Most people don’t need them. The gaming and workstation laptop market is smaller than ever. Integrated graphics have been “good enough” for a while now.

Especially since the Steam Deck and derivatives mostly killed the gaming laptop niche market.

High-end gaming laptops needed desktop GPUs anyway, because at least for Nvidia, once you get past the xx60 range, each step up in the mobile lineup brings much smaller performance gains than the same step on desktop.

At some point it’s cheaper to get a gaming desktop and a cheap laptop lol

Out of curiosity, why do you refuse to support Nvidia? AMD isn’t some saint, they’re a shitty corporation just like Nvidia. They got lucky when Jim Keller saved their asses with the Ryzen architecture in the mid-2010s. They haven’t really innovated a god damn thing since then and it shows.

Edit: I get it, I get it, Nvidia is a much shittier company and I agree. I was pretty drunk last night before bed, please pardon the shots fired

Besides what was mentioned below, it’s not about making competitive products but about Nvidia being an absolute asshole since the 2000s, and they’ve gotten even worse since the crypto and AI craze started. AMD and Nvidia are both corporations, but they are not even playing the same game when it comes to being anti-competitive.

There’s a reason why Wikipedia has a controversies section on Nvidia: https://en.m.wikipedia.org/wiki/Nvidia#Controversies

That list is far from exhaustive. There’s so much more about Nvidia that you should remember vividly if you were a PC gamer in the 2000s and 2010s with an AMD GPU, like:

- When they pushed developers to use an unnecessary amount of tessellation because they knew tessellation performed worse on AMD

- When they pushed their GameWorks framework, which heavily gimped AMD GPUs

- When they pushed their PhysX framework, which fell back to the CPU on systems with AMD GPUs

- When their driver disabled their own GPUs if it detected an AMD GPU in the same system

- When they were cheating in benchmarks by adding optimizations specific to those benchmarks

- When they shipped an incomplete Vulkan implementation but claimed they are compliant

Nvidia has been gimping gaming performance and visuals since forever for both AMD GPUs and even their own customers and we haven’t even gotten to DLSS and raytracing yet.

I refuse to buy anything Nvidia until they stop abusing their market position at every chance they get.

If you want one more, NVIDIA is building a multi-billion-dollar tech hub in Israel.

If you want more, NVIDIA is bullying tech reviewers into pushing fake reviews

> they’re a shitty corporation just like Nvidia

Neither of them are anyone’s friend, but claiming they’re the same level of nasty is a bit of a stretch.

Not saying that supporting the underdog isn’t good.

I just don’t think AMD is less “nasty”; the only thing stopping them is the lack of power to be.

Right, and since they’re not dominant, they’re less nasty. If they become dominant, consider switching to whoever is the underdog at that point.

Not OC, but I don’t want to deal with Nvidia’s proprietary drivers. AMD cards “just work” on Linux.

Except that AMD doesn’t support HDMI 2.1 on Linux (not their fault to be fair, but still)

This may be an unpopular opinion but who cares? I’ll use DVI if I have to.

I personally don’t have a need for it, but if someone has a 4K 120Hz TV or monitor without DisplayPort that they want to use as such, it’s kinda stupid that they can’t.

yeah, but that’s the fault of the HDMI standards group. AMD cards could only support HDMI 2.1 if they closed their driver down. I guess this can’t be fixed with a DP to HDMI adapter either, right?

my opinion: displayport is superior, and if I have an HDMI-only screen with supposed 4K 120Hz support I treat it as false info.

Is that the case on mobile APUs as well? I’m pretty sure my laptop with a 7840U does 4K 120Hz.

Intel cards do, I think, so that’s a non-NVIDIA option.

yeah intel (⊙_◎)

That’s completely valid, I haven’t had issues on Linux myself with nvidia, but I know it’s definitely a thing for a lot of people.

Haven’t innovated? 3D chip stacking?

CPU companies generally don’t change their micro-architecture, especially when it works.

intel didn’t for 7 years, but they started and ended that trend.


Now if I could only afford a Framework…

If I could only afford any decent gaming laptop honestly.

It’s getting harder and harder to afford high-end computers. I have already decided my next new computer will be a mini desktop. They are noticeably cheaper, can be well spec’ed, and are powerful with a small footprint.

now if only they sold them in my country…

OK, I’m a bit confused. I have a Framework 16 that I bought earlier this year, without the GPU expansion bay module. I don’t care that much about the expansion bay because even without it, the laptop is already huge. I have an eGPU to play on when I need it.

What upgrade options does this announcement offer to me?

I’m dissatisfied with:

- the webcam

- screen colors / brightness

- key stability on the keyboard (the keys are a bit wobbly)

- speaker sound quality (I’m not expecting the best, but something better than what it shipped with)

They are announcing a new webcam; will it be backwards compatible?

Otherwise I’m really happy with it. I absolutely love the modular I/O; being able to swap which side the audio jack is on is amazing. Happy to support this endeavor of repairability.

There’s a new webcam, and there’s also an “empty” expansion bay module where you can put two NVMe SSDs in place of the GPU.

They made a new webcam, but I’m not sure if recent shipments of the 16 come with the new webcam or if you have to buy the new module :/

At least there’s an option at all.

That’s exactly why I like framework laptops.

For laptops, either get a last-generation (or older) 8-core CPU with a 5070/4070, or be happy with the Ryzen AI 300 series iGPU. Buy more memory instead. You might one day want to run local AI/LLMs.

Problem is almost no laptop has Strix Halo. Not even the Frameworks.

And rumors are its successor APU may be much better, so the investment could be, err, questionable.

IIRC this was due to the design of the chip. Framework said the memory bandwidth Strix Halo needs is waaaaaaayyyyy higher than what the SO-DIMMs that laptops use can deliver.

Hence they made the desktop, the only thing they could think of doing with that class of APU.

It’s just soldered LPDDR5X. Framework could’ve fixed it to a motherboard just like the desktop.

I think the problem is cooling and power. The laptop’s internal PSU and heatsink would have to be overhauled for Strix Halo, which would break backwards compatibility if it was even possible to cram in. Same with bigger AMD GPUs and such; people seem to underestimate the engineering and budget constraints they’re operating under.

That being said, way more laptop makers and motherboard makers could have picked up Strix Halo. I’d kill for a desktop motherboard with a PCIe x8 GPU slot.

So I’m going to be skeptical here. I had an older 9xx MSI laptop that was touted as having a replaceable, “upgradable” GPU for the next generation at the time.

That ended up as a big ol’ whoops, because replacing the GPU screwed with thermals, and it turned out you couldn’t actually upgrade for all kinds of reasons, which resulted in a class action suit.

Just color me skeptical on these types of things.

Framework has been pretty consistent on upgradability. You can even put the newest MOBOs/CPUs in the oldest laptops since they kept the formfactor identical. They sell such mobos on their website.

GPUs are a bit of a different monster since there’s no such thing as a standard socket; you’re bound by the manufacturer’s spec for the pinout.

And that was the case with MSI laptop and Nvidia partnership when Nvidia went full Darth Vader and changed the terms of the deal.

I mean more power to them if they can actually deliver actual modules that can be upgraded and if I can actually see a generation or two of this actually working, I’ll be on board but once bitten, can’t fool me again for the time being.

The standard is PCIe, and it is interchangeable. This is the second dedicated video card you can get in a Framework laptop, and they can be swapped out with each other. The other video card is even an AMD Radeon.

Again, that’s great if they can continue to update and release their GPU module to work with additional and future gpus. I’ll believe it when I see it be updated with the next generation of gpus because just like it said on their press release, others have tried it and failed.

This is the next generation GPU. The Radeon is a last-generation model that you have been able to buy for a while now. What you are asking for currently exists and is something you can buy on their website right now and upgrade your older laptop with:

Prior Module: https://frame.work/products/16-graphics-module-amd-radeon-rx-7700s

New Module: https://frame.work/products/laptop16-graphics-module-nvidia-geforce-rtx-5070

Ah, I see. Well cool, that’s actually pretty neat then, and reasonably priced, at least by the standards of today’s ridiculous GPU market. Maybe they will be the ones to break the curse then and I can have a laptop that I can actually treat like a desktop.

Could you not just get an eGPU dock, and do it that way?

> Maybe they will be the ones to break the curse then and I can have a laptop that can actually treat like a desktop.

Nah, unfortunately they are just as beholden to the GPU makers as any of us. More than larger laptop OEMs for sure.

A future Intel Arc module may be the only hope, but that’s quite a hope.

I just got a 10L SFF desktop I can put in a suitcase, heh…

Since the cooling system is self contained in the module, you shouldn’t have that issue.

The new GPU also has the same 100W TDP as the last one.

damn son, those lappies aint cheap

yeah, at these prices it makes more sense to just buy a new laptop in a couple of years… oh well.

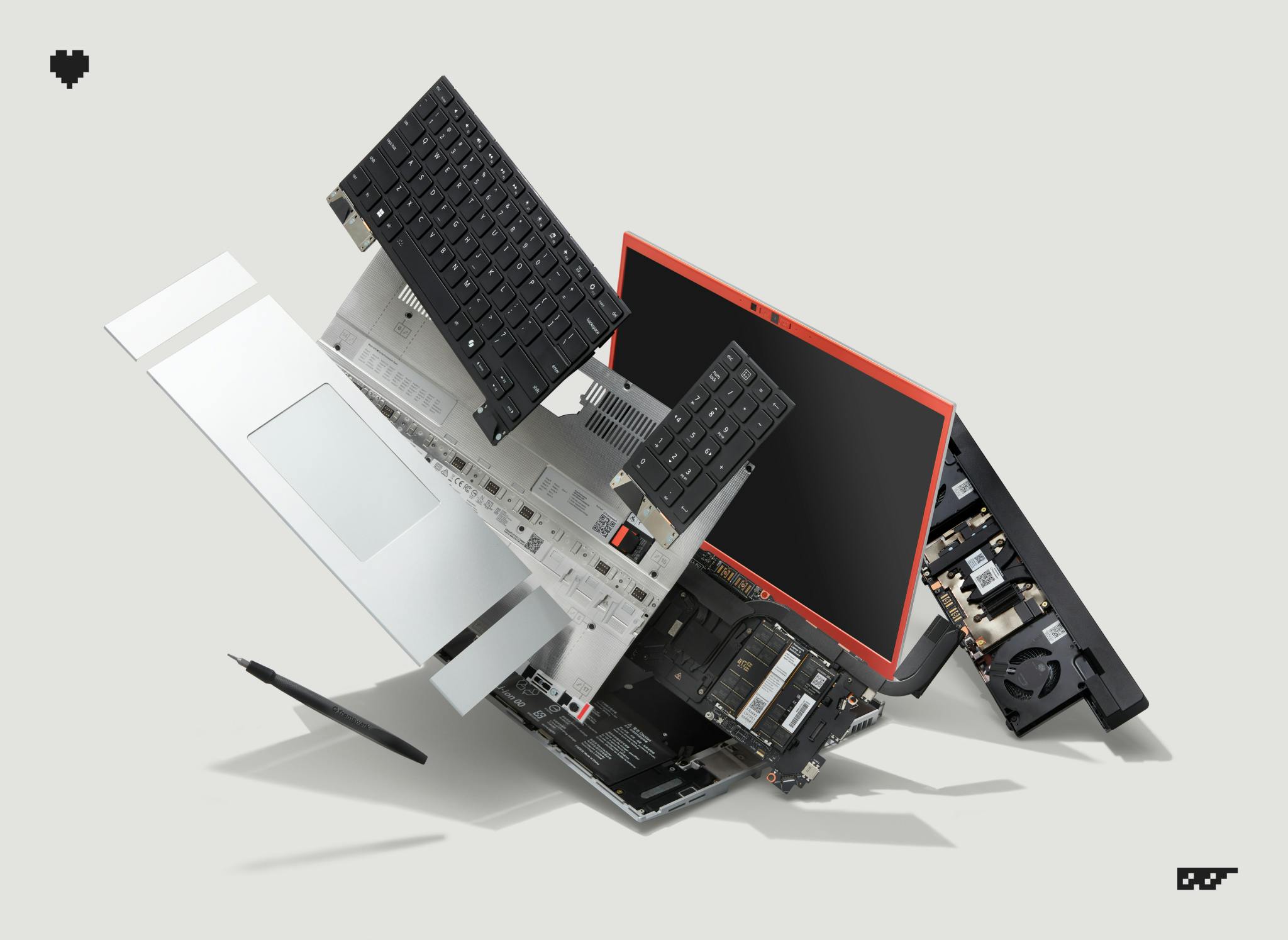

The more impressive thing is that they managed to get the Nvidia upgrade to be backwards compatible with existing Framework 16 models.

That’s the push I need to really, truly believe they’re committed to the goal of upgradability. Too many “modular” products have come out where the “upgraded” modules were only available if you bought the newest version of the base product.

In the next year or so, I’ll probably be buying a new laptop, and this has convinced me that Framework is probably the way to go.

I’ve been rocking a Framework 16 for about a year now and would happily recommend it. It costs a bit more upfront, but I love knowing that I can fix or replace just about anything on it (pretty affordably too). It’s just so refreshing to not have to worry about dumb shit like an obscure power adapter or port forcing my laptop into an early retirement.

It’s not the lightest laptop I’ve ever had, but realistically not all that much different from my last gaming laptop. Now that I’m not a full time student anymore I could probably get away with one of the smaller models, but the form factor is pretty nice.

Overall, no major complaints!

i’ve had a framework 13 from a time before there was any other type of framework, and it’s a great laptop honestly. i’ve yet to do big upgrades, but just being able to repair it myself is awesome. one time i dented the chassis around where the power button was. no worries, i just changed the input cover and bam, 5 minutes later it’s like new.

my only complaint is that the battery life is atrocious. i heard it’s better (but still not great) on newer models tho

I have a newer gen 13 and yeah battery life is mediocre. I love literally everything else about it though so it’s ok.

I can’t remember the last time I wasn’t near an outlet though tbh.

I’ve got an HX 370 one, and aside from the battery, the only other complaint is the screen: max brightness isn’t much, and I miss my previous laptop’s touch screen.

Oh interesting, the brightness hasn’t been an issue for me but different strokes for different folks.

I’ve never had a touch screen laptop so I can’t miss it lol I bet it’s convenient sometimes though

I have a 7840U with a 55Wh battery. I can squeeze out 7 hours. If I’m power-using, then 5-6 is typical. With the 63Wh battery, you’ll get about 15% more time.
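A rough back-of-the-envelope check of that 15% figure, using the numbers quoted in the comment and assuming runtime scales linearly with capacity at the same average power draw (real batteries, aging, and workloads won’t be that tidy). The function names are just illustrative:

```python
def runtime_gain(old_wh: float, new_wh: float) -> float:
    """Fractional runtime gain from a bigger battery, assuming the same average draw."""
    return new_wh / old_wh - 1.0


def average_draw_w(capacity_wh: float, runtime_h: float) -> float:
    """Average power draw implied by a full-battery runtime."""
    return capacity_wh / runtime_h


def runtime_h(capacity_wh: float, draw_w: float) -> float:
    """Runtime for a given capacity at a constant average draw."""
    return capacity_wh / draw_w


# Figures from the comment: 55 Wh lasting ~7 hours, and a 63 Wh pack.
draw = average_draw_w(55, 7)  # about 7.9 W average
print(f"gain: {runtime_gain(55, 63):.1%}")            # gain: 14.5%
print(f"63 Wh runtime: {runtime_h(63, draw):.1f} h")  # 63 Wh runtime: 8.0 h
```

So “about 15%” checks out, which works out to roughly one extra hour on the 7-hour light-use figure.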

yea, that’s what i meant when talking about newer gens being better

i have an i5-1240P (with the 55Wh battery) and i’m lucky to get 5 hours while on power saver reading PDFs

I have two Intel frameworks, and they both suck in regards to battery life

Buuut, I just have a big power bank in my backpack. Gives me at least 1 full charge when I’m on the go. And at home I just have a lighter laptop due to smaller battery

The only thing that pisses me off about framework, is their abysmal software and communication in that regard. It’s basically impossible to get them to acknowledge or fix problems in their firmware

Out of curiosity, what CPU? I had an i5-1135G7 laptop that I motherboard-swapped with a Ryzen 7 5825U motherboard. The battery life on the i5 was atrocious. I got 2 hours out of it doing note taking, maybe 3 when new and I had the full battery capacity to work with. After the motherboard swap, I got basically double the battery life in the same conditions.

(HP pavilion 15-eg050wm and then I put a 15-eh2085cl motherboard in it)

i5-1340P and i7-1260P

Both FW13

both get maybe 3 hours if I’m lucky. Although they are a couple years old now. Fresh battery got me maybe 4 when lucky.

I have a 25k power bank, so I can extend the runtime quite a bit. The “at least once” above is quite conservative. it’s probably closer to 2. and that includes using it while charging.

I heard the ryzens are a lot better regarding power, so it doesn’t surprise me that the runtime basically doubles

I’d recommend disabling boost and setting cooling to passive.

On Windows, if you set “Maximum processor state” to 99% in the advanced power plan settings, it will disable boost. You can set the system cooling policy there as well. Also, repasting is probably beneficial. The more efficient your cooling system is, the less the fans need to run, and you’ll get better battery life as a result.

That’s what I noticed on the i5 laptop, it would kick on the fans doing basically nothing and would kill battery. When the fans were off, the estimates were higher. Also maybe disabling the P cores in both machines might be beneficial.
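For anyone who’d rather script those two settings than click through the advanced power options, here’s a sketch that drives Windows’ built-in `powercfg` tool. It assumes the `SCHEME_CURRENT`, `SUB_PROCESSOR`, `PROCTHROTTLEMAX`, and `SYSCOOLPOL` aliases (check `powercfg /aliases` on your machine), only changes the on-battery (DC) values by default, and just prints the commands unless you explicitly pass `dry_run=False` on Windows. The 99% trick is the workaround described above, not a documented “disable boost” switch, and `build_commands`/`apply` are hypothetical helper names:

```python
import subprocess
import sys

# powercfg aliases (assumed; verify with `powercfg /aliases` on your system).
SCHEME = "SCHEME_CURRENT"
SUBGROUP = "SUB_PROCESSOR"
MAX_STATE = "PROCTHROTTLEMAX"  # "Maximum processor state" (percent)
COOLING = "SYSCOOLPOL"         # "System cooling policy": 0 = passive, 1 = active


def build_commands(max_state_pct: int = 99, passive_cooling: bool = True,
                   on_battery: bool = True) -> list[list[str]]:
    """Return the powercfg invocations for the chosen settings."""
    if not 0 <= max_state_pct <= 100:
        raise ValueError("max_state_pct must be between 0 and 100")
    flag = "/setdcvalueindex" if on_battery else "/setacvalueindex"
    cooling_value = "0" if passive_cooling else "1"
    return [
        ["powercfg", flag, SCHEME, SUBGROUP, MAX_STATE, str(max_state_pct)],
        ["powercfg", flag, SCHEME, SUBGROUP, COOLING, cooling_value],
        # Re-activate the current scheme so the new values take effect.
        ["powercfg", "/setactive", SCHEME],
    ]


def apply(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print the commands, or run them when dry_run is False on Windows."""
    for cmd in commands:
        if dry_run or sys.platform != "win32":
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    apply(build_commands())
```

Running it as-is only prints the three commands, so you can eyeball them before applying anything.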

yup, I already have boosting disabled.

Mainly due to quite shoddy firmware code that controls charging, which causes wild battery flipping behavior even when using a powerful charger. It’s a long-known issue, and FW is annoyingly quiet about the problem. It’s the reason I’m annoyed by how they handle communication about software issues.

Ooof. Only time I had that issue was when I used a 35 watt laptop adapter with my old HP laptop. It wanted a 65 or 90 watt adapter.

The only downside I have seen is that GSYNC will not work. The newer display supports it, but anyone upgrading an older Framework 16 with the new NVIDIA card will have to buy the screen upgrade as well if they need GSYNC.

That’s not unexpected. Variable refresh rate (GSYNC and Freesync) has always needed the display to support it first.

Yeah, but the old display supports VRR via VESA Adaptive-Sync. Nvidia supports that as well, but I’m not sure whether their mobile GPUs support it on built-in displays.

If it is supported, I don’t see any advantage of having Gsync vs. standard VRR.

If not that’s a shame. Pretty wasteful having to buy the same display with different firmware just to get adaptive sync working.

Will freesync work with it?

Nowadays they’re the same thing. Nvidia uses a different name because they like appropriating things, I guess.

They are not the same thing. GSYNC requires the monitor to be embedded with an NVIDIA controller.

It does not. You’re talking about the original version of GSYNC, which required a hardware module. That’s no longer the case.

No. That’s G-SYNC compatible, G-SYNC monitors require an “NVIDIA G-SYNC processor”.

https://www.nvidia.com/en-in/geforce/products/g-sync-monitors/

“g-sync compatible” monitors are still advertised as “g-sync”. So, while you’re technically right, even though nvidia’s marketing differentiates versions, manufacturers only put that in the fine print. Also, if you go back to the original question (“Will freesync work with it?”), if gsync works, freesync works as well. Regardless of which variant of gsync you have.

Lastly, framework mentions “we’ve updated our 165Hz 2560x1600 panel to support NVIDIA G-SYNC®” but I’m not sure they’re referring to actually including a coprocessor. It most likely refers to just adding VRR support.

Yeah it pushed me to finally put in an order, got to wait till December now as I’m in the third batch.

I wanted to wait till we had proof that the graphics card would be upgradable and a better one would be available, as their AMD card is a bit too lightweight for me.

I would rather it had been a better AMD card (I have a 7900 XTX in my desktop), but I will take what I can get at this point, especially as I know I can upgrade later.

Now if only Framework did that with AMD & Intel GPUs, then we’d all be balling.

Also please make it available in the East

They’ve had AMD for a few years now. No Intel one, but they do sell empty GPU module shells, so maybe someone could cut down a desktop Intel card to fit in one?

I doubt you could (well, not with a level of effort 99.9999% of people would be willing to put in); power and the PCIe connection would cause problems.

Yeah, I bet someone would do it for Youtube views, but you’re right, that’s too much.

That’s it, every other gaming laptop is finished. Even though I have the older CPU I can get the newest GPUs now. Nobody can claim that right now. No other company is doing this.

The other laptops aren’t finished yet. Framework is super expensive, even compared to other gaming laptops.

I think it’s worth it, but that’s not the opinion of a lot of casual people.

And had I not gotten one via my job, I would not have gotten a Framework 16 because of the price.

Well, the idea is that you can upgrade components without replacing everything, so the initial cost is higher but the long-term cost is lower.

That said, they took their time. The 1st generation is old now. The Radeon dGPU is probably weaker than, or on a similar level to, the new Ryzen iGPU. There is no Radeon dGPU upgrade path other than “just use the old one”. They have a better upgrade cadence with the 13 inch model.

Not their fault, Radeon mobile parts are not out

What are you talking about? Of course there is newer hardware than a Radeon RX 7700. The 7900 specifically.

There’s also no Ryzen 395 CPU option, even though Framework sources that chip for their non-modular desktop PC.

The 7900 is not newer

> The 7900 specifically.

They have to stay within the TDP. Their only option is something newer and ~100W (like the 5070).

And I’m pretty sure the 7000 series is going out of production anyway…

Also (while no 395 is disappointing), it is a totally different socket/platform, and the 395 has a much, much higher effective TDP, so it may not even work in the Framework 16 as it’s currently engineered. For instance, the cooling or PSU just may not be able to physically handle it. Or perhaps there’s no space on the PCB.

A new power brick is needed anyway. That’s why FW now has a much more powerful one as well.

The 395 obviously would throttle if heat or power become a problem.

If GPD can put the 395 in a handheld, Framework can put it in a 16" chassis.

Oh I know, and I agree. I’m in talks with my boss to maybe upgrade the mainboard prematurely to the latest. I’m using the lower costs as an argument haha

Surely there will be a desktop case for the old mainboard, as with the case for the 13" mainboard. Then you can do a little yoink and have yourself a good desktop PC.

The yoink is not needed. We have a policy to get a new laptop every 4 years. After that, the laptop is all ours once it’s formatted on site (to make sure no company or customer details get leaked). This is how my brother got my old Dell XPS, which he really needed for his education.

Edit: apparently they are working on it, same with a case for the GPU to convert it into an eGPU.

And still no OLED screen… why Framework, why?

I got one of the latest Framework 13s a couple months ago for work, and while I’m happy about the prospects of repairability and upgradability down the line, it’s not a great laptop given its price point.

The build is subpar, with the screen flexing a ton; the keyboard and trackpad are lacklustre and pretty uncomfortable; but the worst part is the screen: it’s dim, with poor colour reproduction, and 3:2 is frankly not for me. And fractional scaling is a mess with XWayland, while it was much better on my 2019 XPS 13.

I love what Framework are pushing for and actually achieving, but tradeoffs are very much at play. I’m hoping for an OLED screen replacement in the near future though.

The good thing: You will probably be able to swap it once they make it available.

I really don’t see it happening considering you would likely just be replacing the whole chassis. I see an OLED in the future, just not a swappable one.

You can buy individual displays directly on framework’s website and swap them into even their oldest laptop. If they make OLED versions in the future, it will be the same: a display swap.

Wow, TIL. Thanks homie!

I’ve yet to use an OLED monitor that didn’t make text look shitty and I’ve used $1000+ OLED displays with high ratings.

Don’t get me wrong, OLED colors and blacks are gorgeous. I love OLED.

Even my Samsung Pro whatever latest laptop with an OLED display…the text just looks off. Which was disappointing because my Samsung phone text is fine.

LG C2/3/4, also gross looking text.

Alienware OLED $750+ monitor? Text was bad.

I love OLED but I’ve yet to find one that works for productivity.

Almost all OLED displays use a different subpixel layout than traditional LCD displays, and subpixel font rendering is designed for the standard LCD layout. Depending on your OS, you may be able to configure the font rendering to look better on most OLEDs. But some people are just more sensitive to this problem.

Yeah, unfortunately I might be one of those people. I can also see some monitors flickering, which gives me a headache in under 3 minutes.

It’s a curse. Especially with in-office pairing.

I think that’s PWM dimming vs DC dimming.

PWM dimming turns pixels on and off to make them darker. So for 50% brightness, they’re off 50% of the time. Higher-end panels flicker much faster, which helps mitigate perceived flicker. I think 500Hz and above is preferred.

DC dimming just lowers the voltage/current to control brightness, with no flickering involved.
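A toy model of the difference described above, under simplifying assumptions: a perfect square wave for PWM, brightness as a 0 to 1 level sampled at evenly spaced time steps, and no panel-specific behaviour. Both approaches give the same average brightness, but only PWM drops to zero inside every cycle; the last function shows why higher PWM frequencies are less noticeable (each dark gap gets shorter). The function names are made up for illustration:

```python
def pwm_waveform(duty: float, samples_per_period: int = 100, periods: int = 5) -> list[float]:
    """Square wave: fully on for `duty` of each period, fully off for the rest."""
    on = round(duty * samples_per_period)
    one_period = [1.0] * on + [0.0] * (samples_per_period - on)
    return one_period * periods


def dc_waveform(level: float, samples_per_period: int = 100, periods: int = 5) -> list[float]:
    """DC dimming: a constant, lower output level with no off phase."""
    return [level] * (samples_per_period * periods)


def dark_gap_ms(freq_hz: float, duty: float) -> float:
    """Length of each off interval in a PWM cycle, in milliseconds."""
    return (1.0 - duty) / freq_hz * 1000.0


def mean(xs: list[float]) -> float:
    return sum(xs) / len(xs)


pwm = pwm_waveform(0.5)
dc = dc_waveform(0.5)
print(mean(pwm), min(pwm))  # 0.5 0.0 -> same average, but it goes fully dark
print(mean(dc), min(dc))    # 0.5 0.5 -> never goes dark
for hz in (240, 500, 1000):
    print(hz, "Hz:", round(dark_gap_ms(hz, 0.5), 2), "ms dark per cycle")
```

At 50% brightness, going from 240Hz to 1000Hz PWM shrinks each dark gap from roughly 2 ms to 0.5 ms, which is the “flicker much faster” point in practice.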

I have a friend who has always been picky about displays; I thought he was just being nitpicky.

Since my eyes normally can’t distinguish between 480 and 1080, flicker goes unnoticed.

Aren’t phone screens AMOLED? I’m definitely not an expert, but I thought it was a variation of OLED, which would explain why text looks better.

That being said, I also have an OLED Steam Deck and I can read text on it just fine if the scaling is set correctly in the game or just browsing the web normally in desktop mode.

Ah, true, thanks for the correction.

Maybe I’ve just had bad batches of displays? I don’t know. I got 3 really nice Asus ProArts and the text clarity and colors are fantastic.

Still wish I had blacker blacks.

Yep, text is definitely not handled well on most OLED monitors (or TVs) because of their pixel substructure. It’s usually been better on Linux for me and I essentially don’t notice it anymore, but I also haven’t used Windows in years so I can’t compare.

Hmm, I am working on converting all my things over to Linux so maybe I’ll give it another shot.

Windows always has this weird ghosting going on, super odd.

Did you turn on PC-Mode with your LGs?

I use an LG NanoCell TV as a PC monitor, and the fonts didn’t look good until I set the HDMI input type to PC. And of course you need to play around with font rendering tools like ClearType in Windows.

OLED does not belong on a computer. Also it’s a dead end technology.

What about a SIM slot?

Apparently there are some open-source designs for a SIM card module already. Idk if Framework is planning on making their own though. https://github.com/Zokhoi/framework-cellular-card

Presumably a WAN modem could fit in one of those little USB port things.

*caresses screen*

some day…